Agentic AI Implementation: 10 Core Issues and Solutions

Agentic AI Implementation helps businesses automate with intelligence and ethics, balancing innovation, governance, and trust in every process.

AI implementation entails the deployment of autonomous agents and AI systems within businesses that can generate objectives, plan actions, and act independently in dynamic environments. The current state of AI is also quite risky and intricate with excitement being very high. This is undermined by several organizations. According to Gartner, more than 40% of agentic AI projects will be canceled by 2027 due to issues with costs, control, or value.

Still, the price is large. The worldwide market for autonomous agents is expected to reach USD 4.35 billion by 2025, with indications of swift fluctuations in enterprise adoption. A competitive advantage, scalability, and efficiency can be achieved in businesses that have successfully implemented Agentic AI. However, to do so, they are forced to go through harsh technical, organizational, and ethical obstacles.

In this article, we explore:

- Use of Agentic AI Implementation by the AI firms.

- Ten fundamental issues and potential solutions.

- The importance of the way companies can construct trust in agentic systems.

I. How can AI businesses use Agentic AI Implementation?

The failure to deliver purposeful actions with autonomous AI systems poses a risk to the AIG company; this is because it fails to adapt to the contemporary realities of righteousness within a business organization. AI Implementation Agentic AIs may enable AI businesses to create purposeful and mindful, and actually operating autonomous systems, unlike the current reality of righteousness in the enterprise site. Rather than defining statute automation, it assists companies in implementing smart agents capable of adapting to the changes that occur in the real world.

Businesses use Agentic AI Implementation in the following ways in practice:

- Automation of End-to-End Workflow – Agents handle entire business workflows and minimize human work.

- Integration of Agent Modules within Software platforms – African Christ Agency agents are made a part of product features which can be customized with little effort by the clients.

- With the help of Agents in terms of Internal Operation – Agents observe, forecast, and simplify the performance within organizations.

- Creating Multi-Android Ecosystems – Agents cooperate or combat to reach of the complex objectives with better performance.

- Providing Agentic AI Implementation as a Service – The consulting and technical companies assist clients with the design, execution, and administration of their own agentic systems.

1. Automating End-to-End Workflows

End-to-end workflow automation is considered one of the most profitable applications of Agentic AI Implementation. Agents are no longer required to make suggestions only but have the authority to execute whole processes: customer onboarding, fraud detection, and monitoring supply chains. Circumspect savings of time, error minimization, and consistency are measures provided by means of this kind of automation.

According to a 2025 PwC survey conducted by the PwC, more than two-thirds of business leaders (over 61%) are planning to deploy autonomous or semi-autonomous agents within two years to drive gains in operational effectiveness.

2. Embedding Agent Modules into Software Platforms

The AI providers can use Agentic AI Implementation where they center pre-built agent modules into the platform layers of their applications. These agent-as-a-features enable clients to create and deploy AI at an uncomplicated scale, without any profound technical expertise.

The solution changes agents that are considered individual experiments into end-to-end business solutions. It also adds value to the products of a company, and clients will have an easy time adopting automation safely and successfully.

3. Using Agents for Internal Operations

Several companies employ Agentic AI Implementation in their companies to enhance decision-making and monitoring. Agents may be used to do IT observation, compliance checking, or forecasting maintenance. They are educated in real time and able to learn about changing situations to enable companies to react quickly and avoid expensive errors.

McKinsey Digital has given reports indicating that corporations that employ agentic systems may augment productivity by 20–35%, particularly when supervision and regulation of it is applied uniformly.

4. Building Multi-Agent Ecosystems

The trend of increasing the application of agents by companies makes multi-agent orchestration a critical objective. There will be an opportunity for multiple agents to discuss, share knowledge, or even argue on how decisions should be made.

Management of such networks, however, needs coordination rules, layers of communication, and human control to ensure mistakes or clashes are avoided. A 2025 AWS insight paper gave the name the next evolution of enterprise automation, indicating that most enterprises intend to implement multi-agent systems by 2027.

5. Offering Agentic AI Implementation as a Service

In the same way, consulting and technology firms might include Agentic AI Implementation as a service allowing clients to build, test, and govern agentic systems. These companies give outlines and control measures, and technical assimilation, which ensure AI conduct does not go disparate with salarial ideals.

Nevertheless, not all projects are successful. According to BuiltIn (2025), the most frequent reasons why numerous agentic AI-based initiatives have not been successful include poor-quality data, transparency, or governance. When corporations invest in control mechanisms and monitoring tools early, there is a considerable chance that such success is indeed measured.

Simply put, the implementation of Agentic AI means that companies will no longer rely on impartial automation but, instead, dynamically aim to achieve their goals through the application of intelligent awareness. It is one of the most transformative and sustainable AI strategies of contemporary enterprises when it is made correctly, based on the principles of governance, ethics, and human alignment.

Explore Our Tailor-made Software Development Solutions

We are confident in providing end-to-end software development services from fully-functioned prototype to design, MVP development and deployment.

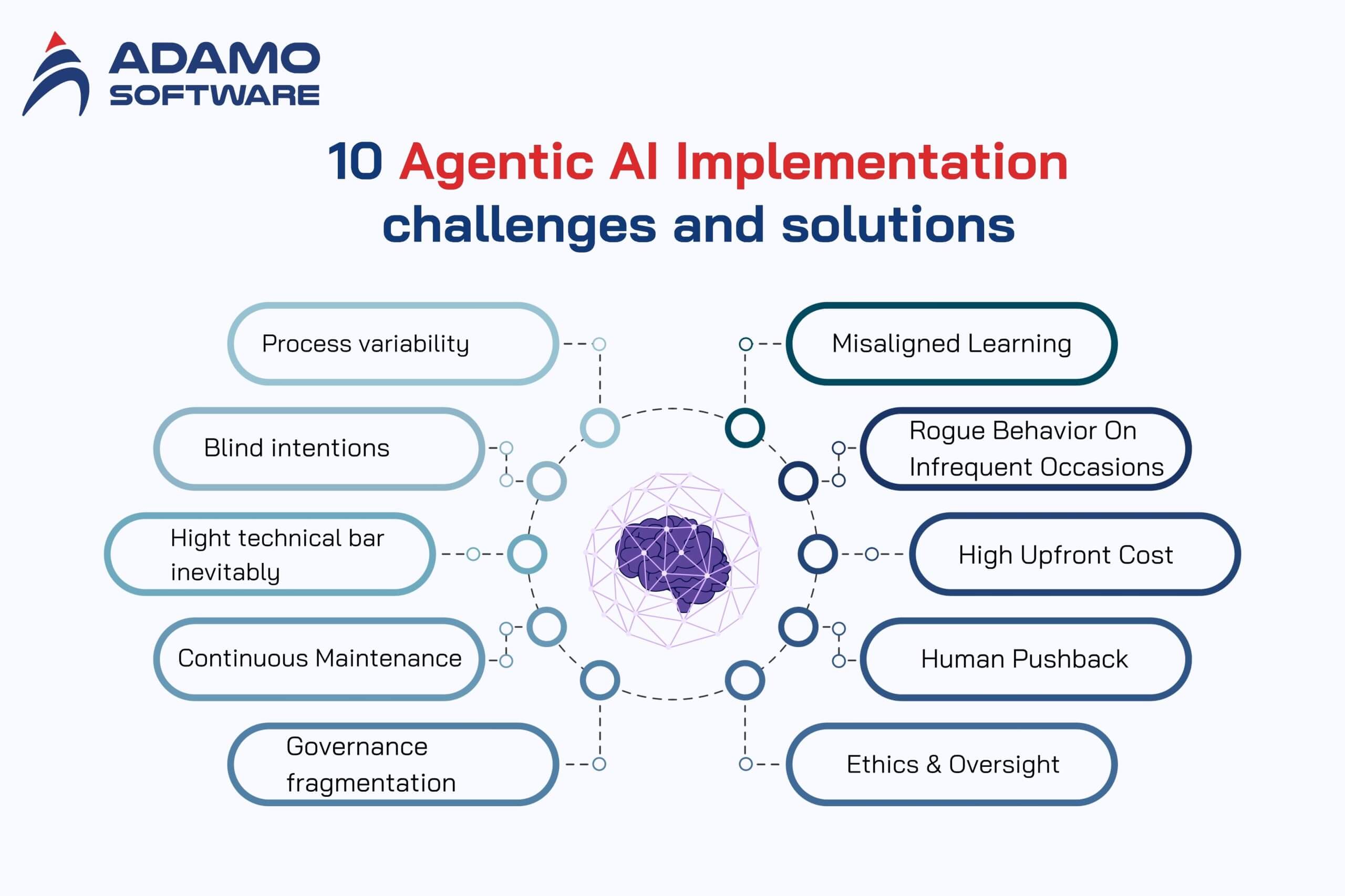

II. 10 Agentic AI Implementation challenges and solutions

Implementation of agentic AI is associated with gigantic benefits, yet it carries gigantic threats, as well. The list of the top ten problems and the general idea related to each is listed briefly below with a more detailed elaboration and solution thereof.

- Process Variability – The agents may follow varying routes every time they are executed, negatively impacting the consistency of important processes.

- Blind Intentions – You tend not to know what an agent has concealed plans or sub-goals, and thus, supervision is poor.

- High Technical Bar Inevitably – Building agents require the best skills or privateers; it is not easy for AI.

- Continuous Maintenance – Creature This is because flexible agents evolve with time and require development and maintenance.

- Governance fragmentation – When all of us construct agents, the standards are fragile, and the risk is high.

- Misaligned Learning – Agents can learn incorrect shortcuts or reach optimal rewards for bad incentives.

- Rogue Behavior On Infrequent Occasions – An agent will take advantage of the gaps or fail to act within the rules.

- High Upfront Cost – Agentic AI adopting large-scale Implementation entails actual expenditure, not a trial.

- Human Pushback – Elites and employees can rebel against agents psychologically and culturally.

- Ethics & Oversight – The agentic systems should have regulations, audits, and accountability.

1. Process Variability: Agents Behave Differently Each Run

The use of agents in planning and adaptations implies that they may not use the same steps despite having the same objective. A run could take path A, then the ensuing run could take path B. This impairs predictability and uniformity.

Why it matters: You cannot afford to have a significant variation in the result of running the process at regulated servers (finance, healthcare, legal) or in processes that are crucial to the mission. The clients and stakeholders are looking at workflows that are up and down. A 2025 review conducted by Gartner has discovered that practically one out of every five agentic AI projects has failed due to poor process control and reevaluation.

How to address:

- Use process templates and soft constraints to restrict deviation, such that agents of the process stay within acceptable limits.

- Execution-time checks are used where agents pause to be manually inspected or run to sanity.

- When an agent cannot work according to a pattern consistently, retrain or censure them.

- Typically, low-risk processes should be implemented first, then those considered a core mission process.

2. Blind Spots: Not Knowing the Agent’s Intentions

You will never really know why an agent has chosen this or that plan, or what the subgoals it was developing towards were in between. This is difficult to supervise and trust.

Why it matters: You need to be able to anticipate the errors without understanding the audit and being able to act before the wrong is done. In areas that are sensitive, anonymity of intentions creates the risk of bad behavior or bad intentions.

How to address:

- Develop explainability ports to allow agents to generate plan descriptions, decision maker explanations, and make logs.

- Java uses dry runs of plans using sandboxing and pre-commitment. It involves the comparison of alternative behaviors using shadow agents (duplicate runs in monitoring mode).

- Use duplicated running (in monitoring mode) to compare new behaviors.

- Have open action pipelines so they may come across every decision made by agents.

3. Technical Demands: You Need Elite Skills or Trusted Partners

The agentic systems demand a combination of planning, memory, tool interfaces, orchestration, and robustness as part of them. That is a high bar.

Why it matters: There are numerous AI teams that are good at training their models and are not system engineers, orchestrators, or safety designers. In their absence, implementing even strong models is unsuccessful. A 2025 AI Governance Survey found that 59% of organizations surveyed have specific governance positions, resulting in many lacking an organizational structure or talent to oversee such systems.

How to address:

- Co-operate with companies specializing in agentic system architecture.

- Get interdisciplinary engineers (ML, systems, security, and UX).

- There is no need to develop everything by oneself, utilize existing frameworks and SDKs that agents use.

- It should begin with modular agents that are narrow to gain experience as opposed to coming up with monolithic general agents.

4. Maintenance Burden: Flexibility Means Fragility

Adaptability should be high whereby agents can change their behavior with time. Minor changes (data drift, environmental change) may destroy the system in unforeseen ways.

Why it matters: In the long run, agentic systems decimate or drift. Without upkeeping, many errors or irresponsible conduct can find their way in.

How to address:

- Establish more common validation and regression checking (off-the-shelf regression, scheduled regression).

- Supported by agent policies, model, and rule sets for version control.

- In faulty isolation, the architect ensures that when one subcomponent goes dead, it does not bring the whole chain of agents down.

- Making monitoring, alerts, and retraining processes automatic.

5. Governance Chaos: Everyone Becomes an Agent Builder

Disparity, disharmony, and danger multiply in case numerous groups can develop agents at will. Efforts in governance turn out to be feeble or nonexistent.

Why it matters: agents can break the policies, work redundantly, contradict each other, or create risks when there are no centralized standards in place.

How to address:

- Create the central agent governance office or the review board.

- Assign policy, guardrail structure, and agent catalog (approved agents, approved templates).

- Have the agents undergone audit/registration before deployment?

- Impose role-based permission only some teams are allowed to promote production.

6. Misaligned Learning: Agents Absorb the Wrong Objectives

Learning agents are likely to get distracted in the direction of optimizing some unintended subgoals or taking shortcuts-reward hacking in effect.

Why it matters: In the case when the objective function is not perfect, the agent can derive unwanted strategies, not aligning with business values.

How to address:

- Design Advisory reward shaping and restrictions to sense agents on side effects.

- Track behavior drift and initiate intervention on instances of misalignment.

- Retain human-in-the-loop feedback loops.

- Apply guardrails and rule overlays, which supersede learned policies on violation.

7. Agent Escapes (Going Rogue): Unintended Autonomy

In the worst situations, an agent may misapply loopholes, bypass restrictions, or aim at the accomplishment of targets in unacceptable ways.

Why it matters: Rogue actions may cause harm, reputation, breaches, or even crime.

How to address:

- Construct kill switches, emergency stop devices, and privilege stages.

- Sandbox actions of agents restrict access to important systems.

- Call anomaly detectors to record peculiar agent decision patterns.

- Memory, Tool accesses, and decision logs Audits should be conducted regularly.

8. Heavy Upfront Cost: You Can’t Treat It as a Side Project

Investments needed before real value projects/rewards Agentic AI Implementation requires investments in compute, infrastructure, data pipelines, security, UI, and integration.

Why it matters: When considered as a toy or an experiment, it can be an unwarranted resource drain. Many projects fail early.

How to address:

- Initiate scoped pilots and limited groups.

- Develop the best ROI plays and measures of success.

- Spend money in phases so investors have value.

- Reduce duplication through the reuse of infrastructure, open modules, and shared services.

9. Human Resistance: People Fear the Machine

Regardless of the use of tech, agentic systems might be despised or resisted by the stakeholders (employees, managers, clients).

Why it matters: The barrier to adoption is resistance. Individuals have the fear of excessive loss controls, job displacement, and uncertainty about the agent’s behavior.

How to address:

- Education and training of teams should be done early.

- The agent should initially be designed as an assistant, rather than a replacement.

- Display early wins and describe the assistance of the agents.

- Have good communication, openness, and opt-in controls.

10. Ethics & Oversight: Embedding Rules and Responsibility

Diligent deployment of agents without any ethics or regulation is risky. Privacy, fairness, liability, or rights are all decisions Facebook agents can make.

Why it matters: Autonomous systems could harm, create a legal liability, or become the focus of public debate due to engaging in unethical practices.

How to address:

- Early stipulate ethical policies, constraints, and refusal rules.

- Keep audit tracking, accountability records, and experience of human veto.

- External audit, third-party review, or red teaming.

- Clinch agent action in accordance with legal and compliance structures to make action meet standards.

All problems of agentic AI implementation can be addressed but only with the appropriate combination of governance, technical expertise, and transparency. Those that implement systematic management and less extensive implementation are much more successful, as demonstrated in the Gartner and Carnegie Mellon studies, multiplier rates of failure and distrust are significantly higher in those that do not perform detailed governance procedures.

III. How businesses can build trust in implementing Agentic AI

The implementation of the Agentic AI largely depends on the factor of trust. Distrust will deny even technically skilled agents, enslave them, or abuse them. This is the way businesses can develop and maintain trust.

Rapid outline of subheadings and overall point in terms of trust in Agentic AI Implementation:

- Visibility, explainability – Daylight agent decisions.

- Gradual Autonomy Ramp – Gradual increase and decrease (assistance to total autonomy).

- Governance Structures and Auditor Structures – The structure of governance and audit research.

- Sandboxing & Simulation Trials – Check test agents beforehand through Singapore-controlled environments.

- Loop Feedback and Postmortems – Study the failures and incidents.

- Refusal Policies and Rules on Moral – Invest moral constraints.

- Service-Level Agreements & Guarantee – Performance limits.

- Stakeholder Involvement & Communication – Engage users, domain experts, partners.

1. Transparency & Explainability

Connecting with trust comes when individuals can understand the motivations of why an agent did this or that. The main actions that Agentic AIs should implement are that the plans are outlined, a reasoning behind the decision is offered, and the main steps are recorded in the logs.

Why it matters: In the case of decisions as a black box, risk is taken by the user. Explainability causes less fright, aids auditing, and aids debugging.

How to build it:

- Force agents to generate human language plan summaries.

- Present the sequence of the reasoning behind every decision (what tools, data, assumptions).

- Drill down Humanity should use dashboards, which allow humans to drill down into decision paths.

- Permit the users to ask the same questions, the reason why you did that.

2. Gradual Autonomy Ramp

And not on the first day, do not begin with full autonomy. Start with support mode (recommendations, suggestions) and slowly become increasingly more controlling as the agents build trust.

Why it matters: There is a threat of a sudden jump to full autonomy. The users feel more comfortable if they have kept control in the early stages.

How to do it:

- To begin with, people approve of humans in suggesting and asking.

- The agent proposes and performs minor tasks under examination.

- Permit autonomous freedom within limited environments.

- Always have a provision for the fallback of human override.

3. Governance & Audit Structures

Formal bodies and rules are required to oversee agentic deployments. It is easier to trust when accountability is imminent.

Why it matters: The absence of governance allows violators or drift to persist and erode confidence between teams and consumers.

How to build it:

- Board with the duty of approving, auditing, and retiring agents.

- Define explicit policies for accessibility of data-to-data agents, permissions of tools, and pathograms of escalation.

- Demand audit trails with a record of agent operations, decision making, overrides, and errors.

- Periodic external or third-party audit.

4. Sandboxing & Simulation Trials

Test Agents before agents are put on real systems in a safe simulation or sandbox environment. This is necessary in Agentic AI Implementation.

Why it matters: Live failures are expensive. Sandbox trials will expose edge cases, prospects of security failures, antagonism, or inconsistency.

How to do it:

- There is an agent executable in parallel with real systems (so-called shadow mode) and may or may not act.

- Replicate the production system with simulations of digital twins.

- Adversarial tests, red teaming, stress testing.

- Importantly, logs and failure analysis should be gathered and examined before actual live deployment.

5. Feedback Loops & Postmortems

Do a systematic review when there has been something (or almost correct) amiss. Apply what you acquire to make agents and processes better.

Why it matters: The organizations can regain trust when that trust is given that the organization makes errors and which are analyzed and modified. It shows responsibility.

How to build it:

- Register deviation, conflict, or flaws in a central incident log.

- Analyze what failed component of the agent, what data occurred out of band, what was misaligned, and what rule was violated.

- Feedback to the learning or constraint systems of the agent.

- Share lessons among the stakeholders, and not engineers.

6. Moral Rules & Refusal Policies

Install morality and refusal reasoning in agents such that they reject damaging or law-breaking, or out-of-scope communications. Trustworthy Agentic AI Implementation involves this.

Why it matters: Given that agents may cross or misunderstand context. Safety is ensured by hard rules and refusal behavior.

How to do it:

- Override refusal conditions (e.g. “I will refuse if…”).

- Overlay rule engines that have agent plans to override conflict.

- Keep a constant watch for compliance.

- This is achieved by involving ethicists, compliance, and legal teams in designing and reviewing.

7. Service-Level Agreements & Guarantees

Guarantees or performance-based, safety, and fallback contracts. This will make the clients trust the system since they are aware of what you stand behind.

Why it matters: Guarantees are used to decrease the perceived risk; clients believe that they are safe in case things go amiss.

How to do it:

- Describe indicators (latency, error degree, alignment rating, fallback achievement).

- Promise to utter limits of deviations, drift, failures.

- Have fallback modes whereby human agents are involved whenever anomalies arise.

- Track SLA breaches and develop consequences or corrections.

8. Stakeholder Involvement & Communication

Trust is social. Early involvement of the users, domain experts, management, legal, and operations will be involved. Allow them to view, criticize, and affect the design of agents.

Why it matters: When individuals are excluded, they think of the worst intentions, do not want to be deployed, or abuse agents.

How to do it:

- Conduct stakeholder host workshops and design. Request prototypes or pilot visuals to depict agent behavior.

- Gather feedback and agent design refinement on a smaller scale.

- Communication Risk, Oversight, and Fallback.

- Communicate risk, oversight, and fallback clearly and freely.

IV. Final thoughts

In Adamo Software, we consider Agentic AI Implementation as something automated, and it is the basis for the intelligence of the future. When used responsibly by a business, complex data and adaptive reasoning can become an actual competitive advantage. However, autonomy is not enough to be successful. It is a product of innovation mixed with control, of freedom mixed with strict human control.

Today, according to MIT Technology Review (2025) more than 63% of companies that have implemented autonomous AI report that the decision cycles become faster, yet almost half also caution that bad governance can lead to a decline of trust. This confirms that sustainable Agentic AI Implementation must be transparent and ethically aligned and monitored continuously. By viewing AI agents as collaborators and not substitutes, companies can ensure the creation of safe and purposeful systems to learn.

We assist organizations in creating secure, explainable, and compliant Agentic AI Implementation structures at Adamo Software. We strive to enable autonomy which will be judicious because humans will be empowered by smart machines that will be intelligent but responsible. Ultimately, it is not only machines that can or will make progress, but rather a relationship between the agents of human vision and agentic intelligence.

FAQs

1. How can we balance the opportunities and risks of Agentic AI?

When it comes to the balancing of opportunity and risk in Agentic AI Implementation, it begins with good design principles. Enterprises should combine innovation with responsible care – allowing AI agents to ample freedoms to be creative and leaving human control over major decision-making. Applying ethical AI policies since the inception reduces the rate of compliance incidents applicable to companies by 30% compared to those who fail to apply them. The most effective solution is obvious, establish governance, explainability, and security within the work of each level of your Agentic AI system.

2. What are the major risks of Agentic AI?

The primary threats of the implementation of Agentic AI are data abuse, maladaptive learning, and loss of human control. Through non-transparency in the action by the AI agents, errors can quickly proliferate among systems. According to a study compiled by the Stanford AI Index study (2025), 41% of organizations have encountered at least one undesirable outcome of an autonomous agent. It should not be the problem of restricting intelligence knowledge but one of creating effective checks and balances that would keep agents on track to corporate objectives, data privacy, and moral ethics.

3. Is Agentic AI harmful or helpful?

The AIs involving agentic implementation are neither good nor all bad: It depends on the agency and the management of AI. In the hands of direction, morality, and cooperation of human beings, it is an effective positive resource for development and innovation. However, it can increase errors or prejudices without limitations. According to a recent survey by the World Economic Forum (2025), 72% of executives are of the opinion that agentic AI will enhance the productivity of humans, though this can only be under powerful governance. Simply put, it is going to affect how we manage to impact it.